New work Discussion

| Author | Message |

|---|---|

|

Send message Joined: 18 Feb 17 Posts: 81 Credit: 12,990,289 RAC: 6,855 |

And on my i7-9700 (which has eight full cores), it checkpoints at 23 minutes. But that is again with limiting the N216 to running on only four cores. The other four cores are on TN-Grid, which seems to be an easy project for this purpose. I vaguely remember discussion of Rosetta eating up L3 cache as well, but can't find the discussion anywhere. Is this still true today, and should I be limiting it alongside the N216 and N144? |

|

Send message Joined: 15 Jan 06 Posts: 637 Credit: 26,751,529 RAC: 653 |

"I vaguely remember discussion of Rosetta eating up L3 cache as well, but can't find the discussion anywhere." Good question. If you look, I think you will find that I initiated that subject on Rosetta. The answer is that insofar as I can tell, Rosetta works OK with CPDN, though at the moment I like TN-Grid even better. But the "cache" issue is a bit tricky. It seems to be not just the size of the cache, or else I could run a lot more N216 on my Ryzen 3600 than my Ryzen 2600, for example. Maybe it is how the cache is used, or even a question of the L2 cache rather than the L3 cache. At any rate, you ultimately have to try it out. I don't see much problem with the N144 though. |

|

Send message Joined: 31 Aug 04 Posts: 32 Credit: 9,526,696 RAC: 109,831 |

@Wolfman1360 "I vaguely remember discussion of Rosetta eating up L3 cache as well, but can't find the discussion anywhere." Jim1348 has referred to local threads where this has come up; if you look in the threads about UK Met Office HadAM4 at N216 resolution and UK Met Office HadAM4 at N144 resolution you'll find several mentions of L3 cache bashing, especially in the N216 thread. In one message in the N144 thread I actually replied to one of your posts, talking about workload mixes (and again in a later message). Jim1348 (and others) had some good contributions in those threads too. I don't recall many explicit references to Rosetta, but WCG MIP1 (which uses Rosetta) got some dishonourable mentions. You may also have seen (or even participated in) threads about MIP1 at WCG: because of the model construction it uses, the rule of thumb is one MIP1 per 4 or 5 MB of L3 cache! I haven't got time to track those down at the moment, sorry! For what it's worth, if you run MIP1 alongside N216 you'll see the same sort of hit as if running extra N216 tasks; N144 is nowhere near as bad! Cheers - Al. [Edited to fix a broken link, then to fix a typo I'd missed!] |
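The rule of thumb quoted above (one MIP1 task per 4 or 5 MB of L3 cache) can be turned into a quick estimate. The following is only a minimal sketch: the function name and the 32 MB cache size are hypothetical illustrations, not anything from the thread, and, as noted earlier in the discussion, cache size alone may not predict how N216 behaves, so the result is a starting point for experimenting rather than a limit to rely on.

```python
def max_cache_heavy_tasks(l3_cache_mb: float, mb_per_task: float) -> int:
    """Estimate how many cache-heavy tasks fit under an
    'N MB of L3 cache per task' rule of thumb (hypothetical helper)."""
    if l3_cache_mb < 0 or mb_per_task <= 0:
        raise ValueError("cache size must be >= 0 and MB per task must be > 0")
    # Round down: a task that only partly fits still competes for cache.
    return int(l3_cache_mb // mb_per_task)


if __name__ == "__main__":
    l3_mb = 32  # assumed L3 size, for illustration only
    for per_task in (4, 5):  # the two ends of the quoted rule of thumb
        count = max_cache_heavy_tasks(l3_mb, per_task)
        print(f"{l3_mb} MB L3 at {per_task} MB per task: {count} tasks")
```

Whatever number comes out, the advice in the thread still applies: try it on the actual machine and watch the checkpoint times.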

|

Send message Joined: 18 Feb 17 Posts: 81 Credit: 12,990,289 RAC: 6,855 |

@Wolfman1360 Thanks for all of these. So far I seem to be doing okay, but I may have bitten off a little more than I can chew. I have an old dual Opteron plugging away at 3 N216 - I figure a month in which they are actually worked on is better than a month of sitting there with nothing grabbing them. I am exaggerating, of course - it shouldn't take quite that long since it is a dedicated cruncher, but who knows. I tend to stay away from MIP at WCG and have recently been crunching Asteroids at home alongside CPDN and Rosetta, though I do have one machine running TN-Grid and it seems to be doing fine as well. My RAC has drastically decreased but should be rising soon enough after playing with the config for CPDN. I am still being very conservative since I'd rather not have computing errors, as has happened a few times already on my Ryzen 1700. |

Dave Jackson Send message Joined: 15 May 09 Posts: 4342 Credit: 16,499,590 RAC: 5,672 |

"My RAC has drastically decreased but should be rising soon enough after playing with the config for CPDN." I am not sure where CPDN sits in the tables for credit for time spent crunching. I know it isn't at the top but I suspect there are probably projects below it as well. |

|

Send message Joined: 9 Dec 05 Posts: 111 Credit: 12,038,780 RAC: 1,393 |

"My RAC has drastically decreased but should be rising soon enough after playing with the config for CPDN." This comparison https://boinc.netsoft-online.com/e107_plugins/boinc/get_cpcs.php would suggest that CPDN gives fewer credits per CPU second than just about any other project. It probably doesn't list all projects, and it includes projects using GPUs as well. At least it is missing the comparison between LHC and CPDN, which are both CPU-only projects that I participate in.

|

|

Send message Joined: 15 Feb 06 Posts: 137 Credit: 33,452,399 RAC: 5,451 |

Any indications (perhaps test batches?) of new Windows work in the foreseeable future? My new Ryzen is getting hungry. Currently it is chewing on two Linux tasks via VMPlayer and LinuxMint, but they seem to be slow going. |

Dave Jackson Send message Joined: 15 May 09 Posts: 4342 Credit: 16,499,590 RAC: 5,672 |

The only thing recently in testing was the OpenIFS type tasks, which are the 64-bit Linux tasks, but even they do not, as far as I know, herald new work soon. That said, I have said things like that before and then work has appeared. In the same way, there have been times when I said new work was on the way and it has been a loooong time coming. |

|

Send message Joined: 5 Sep 04 Posts: 7629 Credit: 24,240,330 RAC: 0 |

There are 4 researchers using Windows: Pacific North West; Mexico (Central America and South America); Korea; ANZ. All of these are probably still waiting for enough of the thousands of models they issued late last year to be returned, so that they can analyse the results. |

Dave Jackson Send message Joined: 15 May 09 Posts: 4342 Credit: 16,499,590 RAC: 5,672 |

"There are 4 researchers using Windows:" Those batches are all at between 73% and 79% success, with 26% to 20% in progress and 1% hard fails. I don't know if the percentage needed to get good results varies from batch to batch, depending on how many more they put out compared with what is needed. |

|

Send message Joined: 15 Feb 06 Posts: 137 Credit: 33,452,399 RAC: 5,451 |

Thank you Dave and Les. I appreciate your (ever) helpful replies. I guess I need to persist with the VMPlayer/Mint computations, now that I have more cores available. However, CPDN does seem to have a lot fewer tasks and active users these days. The WCG Africa Rainfall Project seems mighty slow in getting going too. Perhaps we need a UK Rainfall project with so many suffering flooding just now! |

Dave Jackson Send message Joined: 15 May 09 Posts: 4342 Credit: 16,499,590 RAC: 5,672 |

"Thank you Dave and Les. I appreciate your (ever) helpful replies." The Africa Rainfall Project, I think, just doesn't have enough tasks to go round. I run it on this box, which is a bit underpowered for the N216 tasks. I get one or two a week. Edit: Those are the only tasks I run from WCG. |

|

Send message Joined: 15 Oct 06 Posts: 5 Credit: 6,909,924 RAC: 296 |

Hi. I have not had any new work for over a month. That's the longest I have gone without work in over 10 years. Can't they work out a more equitable way of distribution? Colin |

|

Send message Joined: 5 Sep 04 Posts: 7629 Credit: 24,240,330 RAC: 0 |

Supply and demand. 10s of thousands of Windows computers, and only a few thousand tasks. The window of opportunity for getting work seems to be half an hour to an hour or so, for the individual batches of about 3,000. If a computer isn't asking for work in that time period, then it misses out. And the researchers don't really care which computer does the work, as long as they get their results. |

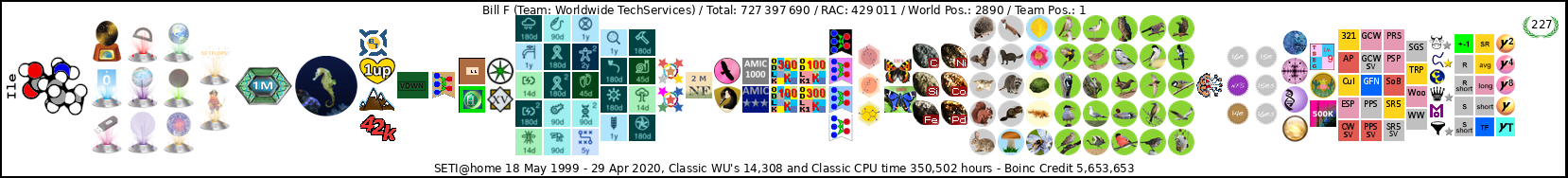

Bill F Send message Joined: 17 Jan 09 Posts: 120 Credit: 1,423,953 RAC: 2,439 |

Yes, as long as they get "their results"... Of course, if the 3,000 WUs were spread 2 per system across the available Windows systems, the researchers would get their 3,000 WUs back faster than by waiting on fewer systems with huge queued stacks of WUs sitting on long due dates. IMHO. Bill F In October 1969 I took an oath to support and defend the Constitution of the United States against all enemies, foreign and domestic; There was no expiration date.

|
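To put rough numbers on the "2 per system" idea, here is a small sketch of the arithmetic. The batch size of about 3,000 comes from the thread; the 20,000 host count is only an assumed stand-in for "10s of thousands of Windows computers", and the function name is hypothetical.

```python
def hosts_served(batch_size: int, tasks_per_host: int) -> int:
    """Number of hosts that receive work if each host is capped at
    tasks_per_host tasks (the last host may get a partial allocation)."""
    if batch_size < 0 or tasks_per_host <= 0:
        raise ValueError("batch_size must be >= 0 and tasks_per_host must be > 0")
    # Ceiling division without floating point.
    return -(-batch_size // tasks_per_host)


if __name__ == "__main__":
    batch = 3000           # approximate batch size mentioned in the thread
    windows_hosts = 20000  # assumed figure, for illustration only
    served = hosts_served(batch, 2)
    share = served / windows_hosts
    print(f"{served} hosts get work, about {share:.1%} of {windows_hosts} hosts")
```

Under these assumptions a cap of 2 per system spreads the batch over 1,500 machines, so most hosts would still miss out on any single batch; the cap changes how quickly results could come back, not how much work exists.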

|

Send message Joined: 5 Sep 04 Posts: 7629 Credit: 24,240,330 RAC: 0 |

But how to achieve this? Aye, there's the rub. |

|

Send message Joined: 18 Jul 13 Posts: 438 Credit: 24,515,124 RAC: 1,691 |

I still believe one way to go is to shorten the WU deadline. The output of completed Windows tasks per 24 hours is small compared to the number of tasks in progress. Linux boxes, though currently fewer, send back a higher percentage of tasks than Windows boxes relative to tasks in progress. This might suggest that even if a user is not hoarding, tasks may still sit idle because other projects take priority. Edit: And yes, there are whole model categories, both Linux and Windows, that haven't received completed tasks recently despite queued tasks in progress (sure, there are ghost WUs as well). |

Dave Jackson Send message Joined: 15 May 09 Posts: 4342 Credit: 16,499,590 RAC: 5,672 |

"I still believe one way to go is to shorten the WU deadline. The output of completed Windows tasks per 24 hours is small compared to the number of tasks in progress. Linux boxes, though currently fewer, send back a higher percentage of tasks than Windows boxes relative to tasks in progress. This might suggest that even if a user is not hoarding, tasks may still sit idle because other projects take priority." I agree that shorter deadlines would be a good idea. The argument against it is the scheduling problems it creates for those who run multiple projects, but to me that is a small price to pay. Edit: My E5400 @ 2.70GHz, which must be one of the slowest computers still able to crunch the longest tasks, will finish an N216 in under 6 months even when only used when I am at the computer. Cutting the deadline back to that, rather than the 11 months when the task was sent, would for me be the least we could do. I will post something on the BOINC boards to see if there are likely to be many objections from those who also run lots of much shorter tasks, not that I consider those objections should be a bar to cutting the deadline. |

|

Send message Joined: 5 Aug 04 Posts: 1056 Credit: 16,520,943 RAC: 1,212 |

"Edit: My E5400 @ 2.70GHz, which must be one of the slowest computers still able to crunch the longest tasks, will finish an N216 in under 6 months even when only used when I am at the computer. Cutting the deadline back to that, rather than the 11 months when the task was sent, would for me be the least we could do." Mine is slower than yours. Processor: GenuineIntel Intel(R) Xeon(R) CPU E5-2603 0 @ 1.80GHz [Family 6 Model 45 Stepping 7]; number of processors: 4; memory: 15.5 GB; cache: 10240 KB. Task: run time 1,963,447.89 s; CPU time 1,860,666.00 s; credit 27,115.14; application UK Met Office HadAM4 at N216 resolution v8.52 i686-pc-linux-gnu

|

|

Send message Joined: 15 Jan 06 Posts: 637 Credit: 26,751,529 RAC: 653 |

"I will post something on the BOINC boards to see if there are likely to be many objections from those who also run lots of much shorter tasks, not that I consider those objections should be a bar to cutting the deadline." (1) Don't ask or you will get a lot of objections. (2) Just do it. (3) Place the whiners on an ignore list. (4) Save the world. (5) Or at least watch it go in more detail. |

©2024 climateprediction.net