Message boards :

Number crunching :

New work Discussion


| Author | Message |

|---|---|

|

Send message Joined: 6 Oct 06 Posts: 204 Credit: 7,608,986 RAC: 0 |

Yes as long as they get "their results".... of course if the 3000 WU's were spread 2 per system across the available Windows systems the researchers would get their 3000 WU's back faster than waiting on fewer systems with huge queued stacks of WU's waiting on long due dates. I do not agree with the statement "huge queued stacks of WU's waiting on long due dates". Long due dates aside, how many cores a machine has is not much of a deciding factor either. "Store at least ___ days of work" is set at ten, and "store additional work" is also set at ten days of work maximum. So how many WU's a machine gets is still self-limiting. I have a twelve-thread machine which gets twenty-four WU's at most. They report back pretty much at the expected time, within one month. So where exactly are these "huge queued stacks of WU's waiting on long due dates" sitting? And sitting they are, somewhere. In the old days we used to squirrel away WU's on floppies or alternative media. Is this still going on? Then there are crashed hard drives which take WU's with them to the grave, but they still get reported. |

|

Send message Joined: 28 Jul 19 Posts: 147 Credit: 12,830,559 RAC: 228 |

Yes as long as they get "their results".... of course if the 3000 WU's were spread 2 per system across the available Windows systems the researchers would get their 3000 WU's back faster than waiting on fewer systems with huge queued stacks of WU's waiting on long due dates. So you have 12 WUs you're working on and 12 queued, and the results will be back in a month. How much better if you have 12 WUs and someone else has 12 WUs, and the results get back in a fortnight. The queued stacks of WUs tend to be on systems that have, say, 12 cores and download 24 WUs, but then only allow 2 WUs to run concurrently alongside their other projects. |
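The arithmetic in the post above can be sketched roughly as follows. This is only a back-of-the-envelope model, not anything BOINC computes: the helper `days_to_drain` and the 14-day run time per task are assumptions chosen to match the "fortnight" figure, and the model assumes the host runs around the clock with equal-length tasks.

```python
import math

def days_to_drain(queued_tasks: int, concurrent_tasks: int, days_per_task: float) -> float:
    """Days until the last queued task finishes when only
    `concurrent_tasks` run at a time (hypothetical helper)."""
    rounds = math.ceil(queued_tasks / concurrent_tasks)
    return rounds * days_per_task

# Assumed run time per task, taken from the "fortnight" in the post.
DAYS_PER_TASK = 14.0

# One 12-thread host holding 24 WUs: 12 running, 12 queued.
one_host = days_to_drain(24, 12, DAYS_PER_TASK)

# The same 24 WUs split across two 12-thread hosts (12 each).
two_hosts = days_to_drain(12, 12, DAYS_PER_TASK)

# A 12-core host that downloaded 24 WUs but runs only 2 at a time.
throttled = days_to_drain(24, 2, DAYS_PER_TASK)

print(one_host, two_hosts, throttled)
```

Under these assumptions the single host needs about a month, the two hosts about a fortnight, and the throttled host far longer, which is the "queued stack" case described in the post.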

Dave Jackson Send message Joined: 15 May 09 Posts: 4389 Credit: 16,820,679 RAC: 5,944 |

Yes as long as they get "their results".... of course if the 3000 WU's were spread 2 per system across the available Windows systems the researchers would get their 3000 WU's back faster than waiting on fewer systems with huge queued stacks of WU's waiting on long due dates. The real problem is machines that are only switched on for a couple of hours a day and grab lots of tasks. We still see tasks returning which take over a year to be completed! I for one don't really mind if a task takes two weeks or a month to complete. But a year may be after the researcher's deadline for submitting their PhD! |

|

Send message Joined: 15 Jan 06 Posts: 637 Credit: 26,751,529 RAC: 653 |

So, where exactly are these "huge queued stacks of WU's waiting on long due dates" sitting and sitting they are somewhere. In the old days we used to squirrel away WU's on floppies or alternative media. Is this still going on? I see a lot of suspends in the results files. They lead to a lot of errors and lengthen the return time even for the tasks that survive. I assume that they are being done on laptops, which I believe is the wrong place to do them. Better to have a reasonable time limit of 60 days (or 30 is OK with me). |

JIM Send message Joined: 31 Dec 07 Posts: 1152 Credit: 22,140,554 RAC: 418 |

I assume that they are being done on laptops, which is the wrong place to do them I believe. I STRONGLY DISAGREE! I have been running CPDN (almost exclusively) for more than 10 years, since the days of the BBC experiment. I have over 20,000,000 credits. All of this work has been run exclusively on laptops. |

|

Send message Joined: 9 Dec 05 Posts: 111 Credit: 12,112,609 RAC: 1,304 |

Really don't understand the concern about "no posting" with a low RAC. Good that this has been taken care of. BOINC servers have had a default rule that does not allow posting in the forums unless you have RAC > 1. That was to prevent junk posts from users who are not interested in doing their bit for the project.

|

Dave Jackson Send message Joined: 15 May 09 Posts: 4389 Credit: 16,820,679 RAC: 5,944 |

Good that this has been taken care of. BOINC servers have had a default rule that does not allow posting in the forums unless you have RAC > 1. That was to prevent junk posts from users who are not interested in doing their bit for the project. I am not sure CPDN has ever had this restriction since I started. I know it is the default in the BOINC server code, but because so many people had trouble installing the 32-bit libs in Linux, it didn't make sense to stop people posting globally. |

|

Send message Joined: 15 Jan 06 Posts: 637 Credit: 26,751,529 RAC: 653 |

I STRONGLY DISAGREE! I have been running CPDN (almost exclusively) for more than 10 years, since the days of the BBC experiment. I have over 20,000,000 credits. All of this work has been run exclusively on laptops. The time limit is the point, and also the error rate. If you can get past those, you can do them however you want as far as I am concerned. But on Windows, most of the errors I see are for too many suspends. I don't think that is from the desktops. |

|

Send message Joined: 11 Dec 19 Posts: 108 Credit: 3,012,142 RAC: 0 |

I STRONGLY DISAGREE! I have been running CPDN (almost exclusively) for more than 10 years, since the days of the BBC experiment. I have over 20,000,000 credits. All of this work has been run exclusively on laptops. I think that errors on suspended tasks are far more likely if the suspended tasks are removed from RAM. This is not the default behavior for the BOINC client and must be changed by the user. If the tasks stay in RAM then I would guess (and it's purely a guess) that the error rate drops by at least an order of magnitude. To find out whether most of the suspension failures happen on desktops or laptops, someone would really need to do a deep dive and look at the machines' specs to see if they are using CPUs designed for desktops or laptops. I think using that metric would give a margin of error of less than five percent. As far as storing work for later goes, I don't bother with it. I have always believed that a fast turnaround is more important to science than a slow, steady trickle of results, no matter what projects you are crunching for. No matter what is being modeled, faster returns will mean a faster evolution of the models. Getting better models designed means getting real-world applications faster. |

|

Send message Joined: 15 Jan 06 Posts: 637 Credit: 26,751,529 RAC: 653 |

As far as storing work for later goes, I don't bother with it. I have always believed that a fast turn around is more important to science than a slow, steady trickle of results no matter what projects you are crunching for. No matter what is being modeled faster returns will mean a faster evolution of the models. Getting better models designed means getting real world applications faster. Yes, I would think so too. I sometimes wonder why the scientists have not insisted that the deadline be shortened. This is not a time or place for tradition. We are up against the wall on time. |

|

Send message Joined: 5 Sep 04 Posts: 7629 Credit: 24,240,330 RAC: 0 |

They do know, and we've talked about it. The current solution is to simply close the batch when enough results have been returned. |

|

Send message Joined: 15 Jan 06 Posts: 637 Credit: 26,751,529 RAC: 653 |

OK, if it works for them, it works for me. I see that the current batch of wah2 sold out quickly; I got two. |

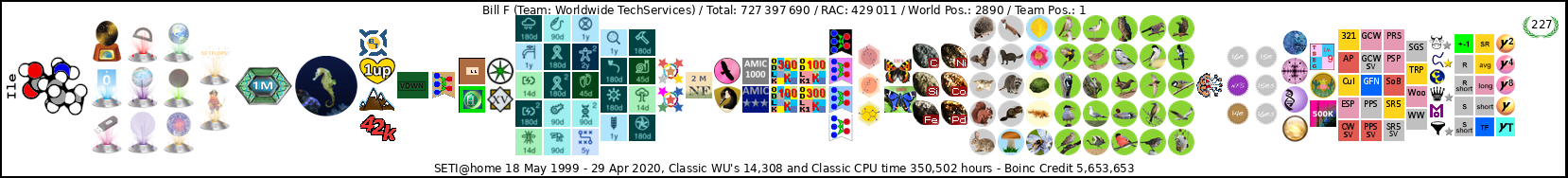

Bill F Send message Joined: 17 Jan 09 Posts: 121 Credit: 1,575,753 RAC: 2,205 |

Well I have two systems with enough power to do a good job. And I happen to have gotten 1 WU on the better of the two. It has been a very long time without any Windows releases that had any volume so I will not stir the political pot. Bill F Dallas TX In October 1969 I took an oath to support and defend the Constitution of the United States against all enemies, foreign and domestic; There was no expiration date.

|

Dave Jackson Send message Joined: 15 May 09 Posts: 4389 Credit: 16,820,679 RAC: 5,944 |

I STRONGLY DISAGREE! I have been running CPDN (almost exclusively) for more than 10 years, since the days of the BBC experiment. I have over 20,000,000 credits. All of this work has been run exclusively on laptops. Two machines is hardly statistically significant, but I have one desktop and one laptop and I see no difference in the error rates between the two. I do, though, vary the number of cores according to time of year and temperature. The machine tells me it is still OK running all 4 cores on the laptop in summer, but even if true, the fan noise is excessive on the laptop, so I cut it to either 75% or 50% (three or two cores out of four). Two cores give about the same level of fan noise at 25C ambient that four give at about 17C. I don't know if I would get more errors running the laptop hotter or just wear it out more quickly. |

|

Send message Joined: 15 Jan 06 Posts: 637 Credit: 26,751,529 RAC: 653 |

Two machines is hardly statistically significant but I have one desktop and one laptop, however I see no difference in the error rates between the two. I look at the errors on the other machines for work units that I complete successfully. The difference is striking. |

JIM Send message Joined: 31 Dec 07 Posts: 1152 Credit: 22,140,554 RAC: 418 |

Any signs of new Windows work? I've just about finished those few from 2 weeks ago. |

|

Send message Joined: 5 Sep 04 Posts: 7629 Credit: 24,240,330 RAC: 0 |

No. No talk or testing about anything. |

Dave Jackson Send message Joined: 15 May 09 Posts: 4389 Credit: 16,820,679 RAC: 5,944 |

No. No talk or testing about anything. And last time there were no signs work was on the way, so hints and testing work aren't always a good indicator. Sometimes it takes a long time to get from testing to the main site, either just due to timetables or because problems crop up. It is not always clear which, even to those with access to a bit more information. |

|

Send message Joined: 6 Oct 06 Posts: 204 Credit: 7,608,986 RAC: 0 |

Yes as long as they get "their results".... of course if the 3000 WU's were spread 2 per system across the available Windows systems the researchers would get their 3000 WU's back faster than waiting on fewer systems with huge queued stacks of WU's waiting on long due dates. |

|

Send message Joined: 15 Jan 06 Posts: 637 Credit: 26,751,529 RAC: 653 |

You can of course control the size of your buffer. Setting it to the default of 0.1+0.5 days will get you fewer work units than setting it to 10 days, for example. But that is rather crude, and sometimes they won't send you anything at all. Unfortunately, the BOINC scheduler is not aware of the "app_config.xml" file, so if you set it to run only two work units, it will still download as if you were running on all 12 cores. So until BOINC gets smarter (a long process), the best way would be for CPDN to provide a user-selectable limit on how many work units you want downloaded at a time. Several projects do that now: WCG, LHC and Cosmology are the ones I can think of at the moment. But CPDN has been going in the direction of fewer options. They need to reverse course. |
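The mismatch described above can be illustrated with a deliberately crude model. This is not BOINC's actual work-fetch algorithm: `estimated_fetch`, `days_to_finish`, and the 10-day run time per task are hypothetical, and the only behavior taken from the post is that the request is sized for all 12 cores even when only two tasks are allowed to run.

```python
import math

def estimated_fetch(buffer_days: float, cores_assumed: int, days_per_task: float) -> int:
    """Rough number of tasks needed to fill the buffer, assuming the
    request is sized for `cores_assumed` cores (hypothetical model)."""
    cpu_days_wanted = buffer_days * cores_assumed
    return math.ceil(cpu_days_wanted / days_per_task)

def days_to_finish(tasks: int, concurrent: int, days_per_task: float) -> float:
    """Days to clear `tasks` when only `concurrent` run at once."""
    return math.ceil(tasks / concurrent) * days_per_task

DAYS_PER_TASK = 10.0  # assumed run time per work unit
CORES = 12            # the 12-core host from the post

# Default buffer (0.1 + 0.5 days) versus a 10 + 10 day buffer.
small_buffer = estimated_fetch(0.1 + 0.5, CORES, DAYS_PER_TASK)
large_buffer = estimated_fetch(10 + 10, CORES, DAYS_PER_TASK)

# Time to clear the large download on all cores versus a limit of
# two concurrent tasks that the fetch estimate does not account for.
all_cores = days_to_finish(large_buffer, CORES, DAYS_PER_TASK)
two_at_once = days_to_finish(large_buffer, 2, DAYS_PER_TASK)

print(small_buffer, large_buffer, all_cores, two_at_once)
```

In this sketch the large buffer is sized to last 20 days on 12 cores, but with only two tasks running it takes six times as long to clear, which is why a project-side limit on downloaded work units would help more than shrinking the buffer.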

©2024 climateprediction.net